Global Releasers

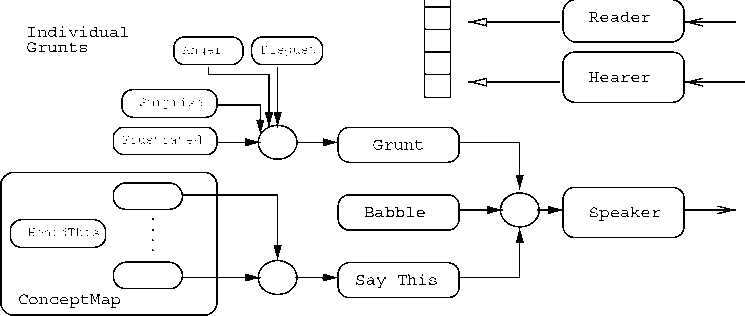

Figure 2: Overall architecture of Kismet’s protoverbal behaviors, where rounded boxes represent instances of behaviors and circles represent connections between behaviors. Connections between HeardThis and individual Concepts are not shown for clarity (see section 5.1).

will say next. A single vocal behavior does not depend on the operation of others. Behaviors may communicate indirectly by receiving each other’s confidence signal as input.

• Goal-directedness. The goal of each vocal behavior is to assuage the robot’s Speech drive by making it say something. Different kinds of vocalizations may satisfy different drives, e.g., the Exploration drive grows when there are no people present in the robot’s environment, and is satisfied by canonical babbling.

• Competition. The competition between vocal behaviors is regulated by a priority scheme in the Lateral architecture (Fitzpatrick, 1997). Each behavior assigns its own priority locally, according to its computed activation level. Implementation details can be found in Varchavskaia (2002); they correspond closely to the original implementation of behavior activation on Kismet.
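The interplay of drives, activation, and locally assigned priority described in these points can be illustrated with a minimal Python sketch. It is not the Lateral implementation: the class names, the growth and satisfaction amounts, and the rule that activation equals drive level times confidence are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Drive:
    """A need that builds up until some behavior satisfies it (hypothetical model)."""
    name: str
    level: float = 0.0
    growth: float = 0.25  # assumed per-step increment

    def step(self, growing: bool) -> None:
        # The drive grows only while its triggering condition holds; capped at 1.0.
        if growing:
            self.level = min(1.0, self.level + self.growth)

    def satisfy(self, amount: float = 0.5) -> None:
        # Vocalizing assuages the drive; the amount is an assumption.
        self.level = max(0.0, self.level - amount)


@dataclass
class VocalBehavior:
    name: str
    drive: Drive
    confidence: float = 1.0

    def activation(self) -> float:
        # Assumed rule: activation is the drive level scaled by confidence.
        return self.drive.level * self.confidence

    def priority(self) -> float:
        # Each behavior sets its own priority locally from its activation.
        return self.activation()

    def fire(self) -> str:
        # Producing the vocalization reduces the drive that motivated it.
        self.drive.satisfy()
        return self.name


exploration = Drive("Exploration")
babble = VocalBehavior("canonical_babble", exploration)

# Three time steps with no people present: the Exploration drive grows.
for _ in range(3):
    exploration.step(growing=True)

priority_before = babble.priority()
babble.fire()
level_after = exploration.level
```

In this sketch no central arbiter ranks the behaviors; each one reports a priority derived from its own state, which is the property the text attributes to the priority scheme.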

The system consists of grunting behaviors, a single canonical babbling behavior, and a number of concept behaviors (this number may grow or shrink at runtime). All of the above execute and interact in the overall architectural framework shown in Figure 2. There are two specialized behaviors, Reader and Hearer, which interface with Kismet’s perceptual system and procure global releasers for vocal behaviors. A single Speaker behavior is responsible for sending a speech request over to the robot.

All of the protoverbal behaviors (rounded boxes in the figure) have access to the global releasers and the currently heard string of phonemes.

The nature of the speech request is determined by competition among individual protoverbal behaviors, implemented through the Lateral priority scheme. To that effect, relevant behaviors write their vocal labels to connection objects, shown as small circles in the figure. The behavior with the highest activation, and therefore the highest priority, will succeed in overwriting all other request strings with its own, which Speaker will end up passing on. A speech request may be a request for a grunt, a babble, or a “word”, i.e., a phonemic string that is attached to one of the concept behaviors. Competition happens in two stages. First, the most active grunt writes its request to the Grunt buffer and the most active concept writes its label to the Say This buffer. Then, the most active of the three types of request buffers writes its output to Speaker. Each of these behaviors produces output only when its activation is above a threshold (determined empirically), so some of the time the Protolanguage Module does not produce an output, and the robot remains silent.
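The two-stage competition can be sketched in Python as follows. This is an illustrative reading of the paragraph above, not the original code: the function names, the representation of a request as an (activation, string) pair, and the threshold value are assumptions, since the source says only that the threshold was determined empirically.

```python
from typing import List, Optional, Tuple

Request = Tuple[float, str]  # (activation, request string); hypothetical representation

THRESHOLD = 0.3  # placeholder value; the actual threshold is not given in the text


def most_active(candidates: List[Request]) -> Optional[Request]:
    """Return the above-threshold candidate with the highest activation, if any."""
    eligible = [c for c in candidates if c[0] > THRESHOLD]
    return max(eligible, key=lambda c: c[0]) if eligible else None


def select_speech_request(
    grunts: List[Request], babble: Request, concepts: List[Request]
) -> Optional[str]:
    # Stage 1: the most active grunt and the most active concept fill their buffers.
    grunt_buffer = most_active(grunts)
    say_this_buffer = most_active(concepts)
    babble_buffer = most_active([babble])

    # Stage 2: the most active non-empty buffer writes its request to Speaker.
    buffers = [b for b in (grunt_buffer, babble_buffer, say_this_buffer) if b is not None]
    winner = most_active(buffers)

    # None means no behavior cleared the threshold, so the robot stays silent.
    return winner[1] if winner is not None else None


spoken = select_speech_request(
    grunts=[(0.4, "grunt_a"), (0.6, "grunt_b")],
    babble=(0.2, "babble"),
    concepts=[(0.9, "dada"), (0.5, "baba")],
)
silent = select_speech_request(grunts=[(0.1, "grunt_a")], babble=(0.2, "babble"), concepts=[])
```

Here the winner is chosen by an explicit `max`, whereas the text describes the highest-priority behavior overwriting the others' request strings in shared connection objects; the sketch reproduces the outcome, not that mechanism.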

The vocal behaviors are influenced by data on the robot’s current perceptual, emotional, and behavioral state. Figure 4 represents the way data and control flow between existing software components of Kismet’s architecture and the vocal behaviors developed here.

The Perception, Behavior, and Motor Systems communicate the current values of Simple Releasers, implemented as variables of global scope, to which any component of Vocal Behaviors has access. Complex Releasers are computed by combining information from these and also become inputs to the new Vocal Behaviors. Finally, the outputs of the system are written directly to the speech stream, overwriting any existing value with the one determined by Vocal Behaviors and requesting a new speech act.
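The flow from releasers to the speech stream can be pictured with a small Python sketch. Every name in it is hypothetical: the releaser keys, the `person_engaged` combination, and the `SpeechStream` class are assumptions chosen to mirror the description, not identifiers from Kismet’s software.

```python
from typing import Dict, Optional, Union

ReleaserValue = Union[bool, float]

# Simple releasers: globally scoped values written by the existing systems.
# The keys are hypothetical examples.
simple_releasers: Dict[str, ReleaserValue] = {
    "face_present": False,
    "heard_speech": False,
    "arousal": 0.0,
}


def person_engaged() -> bool:
    """Hypothetical complex releaser, computed by combining simple releasers."""
    return bool(simple_releasers["face_present"]) and bool(simple_releasers["heard_speech"])


class SpeechStream:
    """Single-slot output: each write replaces the previous value."""

    def __init__(self) -> None:
        self.value: Optional[str] = None
        self.requests = 0  # number of speech acts requested so far

    def write(self, utterance: str) -> None:
        self.value = utterance  # overwrite whatever was there
        self.requests += 1      # each write requests a new speech act


stream = SpeechStream()

# The perceptual side updates the global values...
simple_releasers["face_present"] = True
simple_releasers["heard_speech"] = True

# ...and a vocal behavior reads them, directly or through a complex releaser.
if person_engaged():
    stream.write("ba")
    stream.write("dada")  # replaces "ba" rather than queueing behind it
```

The single-slot stream reflects the statement that outputs overwrite any existing value; a queue would instead preserve earlier requests, which the text does not describe.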

The entire protoverbal system shown on the right of Figure 4 includes the implementation of algorithms for concept and vocal label acquisition and updates.
