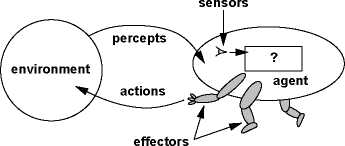

Figure 2: The abstract model of a traditional agent, which perceives the external environment and acts on it (adapted from Russell and Norvig, 1995). The source of perception and the target of action do not include the agent's brain representation.

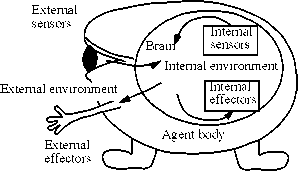

Figure 3: A self-aware self-effecting (SASE) agent. It interacts not only with the external environment but also with its own internal (brain) environment: the representation of the brain itself.

3. A theory for mentally developing robots

A theoretical framework for the autonomous mental development of robots has evolved together with the robot projects described above. We present the major components of this theory here; for more details, the reader is referred to (Weng, 2002).

3.1 SASE Agents

As defined in the standard AI literature (see, e.g., the excellent textbook by Russell and Norvig, 1995, and the excellent survey by Franklin, 1997), an agent is something that senses and acts; its abstract model is shown in Fig. 2. As the figure shows, the environment E of an agent is the world outside the agent.

To be precise in the following discussion, we need some mathematical notation. A context of an agent is a stochastic process (Papoulis, 1976), denoted by g(t). It consists of two parts, g(t) = (x(t), a(t)), where x(t) denotes the sensory vector at time t, which collects all signals (values) sensed by the agent's sensors at time t, and a(t) denotes the effector vector, consisting of all the signals sent to the agent's effectors at time t. The context of the agent from time t1 (when the agent is turned on) up to a later time t2 is a realization of the random process {g(t) | t1 ≤ t ≤ t2}. Similarly, we call {x(t) | t1 ≤ t ≤ t2} a sensory context and {a(t) | t1 ≤ t ≤ t2} an action context.
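The notation above can be mirrored by a small data structure. The following Python sketch records one realization of the context as a list of (x(t), a(t)) pairs and exposes the sensory and action contexts as projections of it. It assumes discrete integer time steps starting at t1 (the text does not fix a time discretization), and the class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


@dataclass
class ContextRecord:
    """One realization of the context {g(t) | t1 <= t <= t2}, g(t) = (x(t), a(t)).

    Hypothetical helper: time is assumed to be discretized into integer steps from t1.
    """
    t1: int = 0
    steps: List[Tuple[Vector, Vector]] = field(default_factory=list)

    def record(self, x: Sequence[float], a: Sequence[float]) -> None:
        # Append g(t) = (x(t), a(t)) for the next time step.
        self.steps.append((tuple(x), tuple(a)))

    @property
    def t2(self) -> int:
        # Time of the most recent recorded step.
        return self.t1 + len(self.steps) - 1

    def g(self, t: int) -> Tuple[Vector, Vector]:
        # Look up the context sample at time t (t1 <= t <= t2).
        return self.steps[t - self.t1]

    def sensory_context(self) -> List[Vector]:
        # {x(t) | t1 <= t <= t2}
        return [x for x, _ in self.steps]

    def action_context(self) -> List[Vector]:
        # {a(t) | t1 <= t <= t2}
        return [a for _, a in self.steps]


ctx = ContextRecord(t1=0)
ctx.record(x=[0.2, 0.9], a=[1.0])
ctx.record(x=[0.3, 0.8], a=[0.0])
print(ctx.t2)                 # 1
print(ctx.sensory_context())  # [(0.2, 0.9), (0.3, 0.8)]
```

Keeping the full record and deriving the two sub-contexts on demand keeps x(t) and a(t) aligned in time, which matches the definition of g(t) as a single paired process.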

The set of all possible contexts of an environment E is called the context domain D. As indicated by Fig. 2, at each time t the agent senses the vector x(t) from the environment using its sensors and sends a(t) as an action to its effectors. Typically, at any time t the agent uses only a subset of the history represented in the context, since usually only part of that history is relevant to the current action.
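A thermostat is a classic traditional agent: x(t) is a temperature reading and a(t) switches a heater. The sketch below keeps only the most recent `window` readings, a concrete case of an agent acting on a subset of its history rather than on the whole sensory context. The setpoint, the window size, and the averaging rule are illustrative assumptions, not part of the theory.

```python
from collections import deque
from typing import Deque


class WindowedThermostat:
    """A traditional (non-SASE) agent: x(t) is a temperature, a(t) is heater on/off.

    Only the last `window` readings are kept, i.e. a subset of the sensory context.
    `setpoint`, `window`, and the averaging rule are illustrative assumptions.
    """

    def __init__(self, setpoint: float = 20.0, window: int = 3) -> None:
        self.setpoint = setpoint
        self.recent: Deque[float] = deque(maxlen=window)  # oldest readings drop out

    def step(self, x_t: float) -> int:
        # Sense x(t) from the external environment.
        self.recent.append(x_t)
        # Choose a(t) from the recent window only: 1 = heater on, 0 = off.
        mean = sum(self.recent) / len(self.recent)
        return 1 if mean < self.setpoint else 0


agent = WindowedThermostat(setpoint=20.0, window=3)
actions = [agent.step(x) for x in [18.0, 19.0, 23.0, 24.0]]
print(actions)  # [1, 1, 0, 0]
```

Note that both the source of perception (temperature) and the target of action (the heater) lie entirely in the external environment; nothing in the loop senses or changes the agent's own decision rule.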

The model in Fig. 2 is for an agent that perceives only the external environment and acts only on the external environment. Such agents range from a simple thermostat to a complex space shuttle. This well-accepted model has played an important role in agent research and applications. Unfortunately, it has a fundamental flaw: the agent does not sense its own internal “brain” activities. In other words, its internal decision process is neither a target of its own cognition nor a subject for the agent to explain.

The human brain allows the thinker to sense what he is thinking about without performing an overt action. For example, visual attention is a self-aware, self-effecting internal action (see, e.g., Kandel et al., 1991, pp. 396-403). Motivated by neuroscience, it is proposed here that a highly intelligent being must be self-aware and self-effecting (SASE). Fig. 3 illustrates a SASE agent. A formal definition of a SASE agent is as follows:

Definition 1. A self-aware and self-effecting (SASE) agent has internal sensors and internal effectors. In addition to interacting with the external environment, it senses some of its internal representation as a part of its perceptual process and it generates actions for its internal effectors as a part of its action process.

Under this new agent model, the sensory vector x(t) of a SASE agent must contain information not only about the external environment E but also about the internal representation R. Further, the effector vector a(t) of a SASE agent must include signals for internal effectors that act on R.
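To make Definition 1 concrete, the following toy sketch (a hypothetical design, not the architecture used in this work) builds x(t) from the external readings plus a sensed part of R, and returns a(t) as an external output paired with an internal action that writes to R. Here R holds an attention index, loosely echoing the visual-attention example, plus a trace that the agent uses but never senses, since a SASE agent senses only some of its internal representation. All names and the "attend to the strongest channel" rule are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class InternalRep:
    """Internal representation R (hypothetical contents)."""
    attention: int = 0  # sensed by the internal sensor
    hidden_trace: List[float] = field(default_factory=list)  # used, never sensed


@dataclass
class ToySASEAgent:
    R: InternalRep = field(default_factory=InternalRep)

    def sense(self, external: List[float]) -> Tuple[float, ...]:
        # x(t) = (external readings..., sensed part of R)
        return tuple(external) + (float(self.R.attention),)

    def decide(self, x: Tuple[float, ...]) -> Tuple[float, int]:
        *external, attention = x
        attended = external[int(attention)]
        self.R.hidden_trace.append(attended)  # internal bookkeeping, not sensed
        # External action: report the attended reading (illustrative).
        # Internal action: shift attention to the strongest channel.
        target = max(range(len(external)), key=lambda i: external[i])
        return attended, target

    def internal_effector(self, target: int) -> None:
        # Acts on R rather than on the external environment.
        self.R.attention = target

    def step(self, external: List[float]) -> Tuple[float, int]:
        a = self.decide(self.sense(external))
        self.internal_effector(a[1])
        return a  # a(t) = (external action, internal action)


agent = ToySASEAgent()
print(agent.step([0.1, 0.9, 0.5]))  # (0.1, 1)
print(agent.step([0.4, 0.2, 0.8]))  # (0.2, 2)
```

The attention index that the agent sets at one step becomes part of what it senses at the next, which is the loop through the internal environment that Fig. 3 adds to Fig. 2.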

A traditional non-SASE agent does use an internal representation R to make decisions. However, this decision process and the internal representation R are not included in what the agent itself senses, perceives, recognizes, discriminates, understands, and explains. Thus, a non-SASE agent is not able to understand what it is doing; in other words, it is not self-aware. Further, the behaviors that it generates are directed at the external world only, not at the brain itself. Thus, it cannot autonomously change its internal decision steps either. For example, it cannot modify its value system based on its experience of what is good and what is bad.
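The value-system example can be sketched with two toy agents. `FixedValueAgent` stands for a non-SASE agent whose value system no action of its own can reach; `SelfModifyingValueAgent` adds an internal effector (its `experience` method) that rewrites the value system from experienced outcomes. The sketch illustrates only the self-effecting half of SASE (it does not model internal sensing), and the class names and update rule (moving a value toward the observed outcome at rate `lr`) are illustrative assumptions.

```python
from typing import Dict


class FixedValueAgent:
    """Non-SASE: the value system is set by the designer; no action of the agent reaches it."""

    def __init__(self, values: Dict[str, float]) -> None:
        self.values = dict(values)

    def choose(self) -> str:
        # Pick the action with the highest current value.
        return max(self.values, key=lambda k: self.values[k])

    def experience(self, action: str, outcome: float) -> None:
        # No internal effector: experience leaves the value system unchanged.
        pass


class SelfModifyingValueAgent(FixedValueAgent):
    """SASE-style: an internal action rewrites the value system from experience.

    The update rule (move the value toward the outcome at rate `lr`) is illustrative.
    """

    def __init__(self, values: Dict[str, float], lr: float = 0.5) -> None:
        super().__init__(values)
        self.lr = lr

    def experience(self, action: str, outcome: float) -> None:
        v = self.values[action]
        self.values[action] = v + self.lr * (outcome - v)


fixed = FixedValueAgent({"approach": 1.0, "avoid": 0.0})
sase = SelfModifyingValueAgent({"approach": 1.0, "avoid": 0.0}, lr=0.5)
for _ in range(2):  # "approach" turns out badly twice
    fixed.experience("approach", -1.0)
    sase.experience("approach", -1.0)
print(fixed.choose(), sase.choose())  # approach avoid
```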

It is important to note that not all internal brain representations are sensed by the brain itself. For example, we cannot sense why we experience certain interesting visual illusions (Eagleman, 2001).
