fact, all the conscious internal effectors should have corresponding internal sensors.

It seems that a developmental program should use a distributed representation, because the tasks are unknown at the time the robot is programmed. It is natural that the representation in earlier processing is very much sensor-centered and the representation in later processing is very much effector-centered. Learned associations map perceptually very different sensory inputs to the same equivalence class of actions. This is because a developmental being is shaped by the environment to produce such desired behavior.
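The many-to-one mapping described above can be illustrated with a toy associative memory. This is a minimal sketch, assuming hypothetical fixed-length feature vectors and a plain nearest-neighbor lookup (a swapped-in technique, not the mapping SAIL actually uses); the class name `AssociativeMemory` and the action labels are illustrative only.

```python
import math
from typing import List, Sequence, Tuple


class AssociativeMemory:
    """Hypothetical nearest-neighbor memory mapping sensory vectors to action classes."""

    def __init__(self) -> None:
        self._items: List[Tuple[Tuple[float, ...], str]] = []

    def learn(self, sensory: Sequence[float], action: str) -> None:
        # Store one association; many different inputs may share one action class.
        self._items.append((tuple(sensory), action))

    def recall(self, sensory: Sequence[float]) -> str:
        # Return the action class of the closest stored input (Euclidean distance).
        if not self._items:
            raise ValueError("memory is empty")
        _, action = min(self._items, key=lambda item: math.dist(item[0], sensory))
        return action


memory = AssociativeMemory()
# Two perceptually very different (hypothetical) inputs trained to the same action class.
memory.learn([0.9, 0.1, 0.0, 0.0], "stop")
memory.learn([0.0, 0.0, 0.2, 0.8], "stop")
memory.learn([0.1, 0.9, 0.0, 0.0], "go")

print(memory.recall([0.8, 0.2, 0.0, 0.1]))  # stop
print(memory.recall([0.0, 0.1, 0.1, 0.9]))  # stop
```

Both queries land in the "stop" class even though they share almost no active input lines, which is the sense in which the later, action-side representation groups perceptually unlike inputs.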

On the other hand, an effector-centered representation can correspond well to a world object. For example, suppose the eyes of a child sense (see) his father’s portrait and his ears sense (hear) the question “Who is he?” The internally primed action can be any of the following: saying “he is my father,” “my dad,” “my daddy,” etc. In this example, the later action representation can correspond to a world object, “father,” but it is still a (body-centered) distributed representation. Further, since the generated actions are not unique given different sensory inputs of the same object, there is no place for the brain (human or robot) to arrive at a unique representation, from a wide variety of sensory contexts, that reflects a world containing the same single object as well as others. For example, there is no way for the brain to arrive at a unique representation in the above “father” example. Therefore, a symbolic representation is not suited for a developmental program, while a distributed representation is.

4. SAIL - An example of developmental robots

The SAIL robot is our current test-bed for autonomous development. It is a human-size mobile robot built in-house at Michigan State University, with a drive-base, a six-joint robot arm, a rotary neck, and two pan-tilt units on which two CCD cameras (as eyes) are mounted. A wireless microphone functions as an ear. The SAIL robot has four pressure sensors on its torso and 28 touch sensors on its eyes, arm, neck, and bumper. Its main computer is a dual-processor, dual-bus PC workstation with 512 MB of RAM and a 27 GB three-drive disk array. All sensory information processing, memory recall and update, and effector control are done in real time.

According to the theory presented in Section 3.,

our SAIL developmental algorithm has some “in-

nate” reflexive behaviors built-in. At the “birth”

time of the SAIL robot, its developmental algorithm

starts to run. This developmental algorithm runs in

real time, through the entire “life span” of the robot.

In other words, the design of the developmental pro-

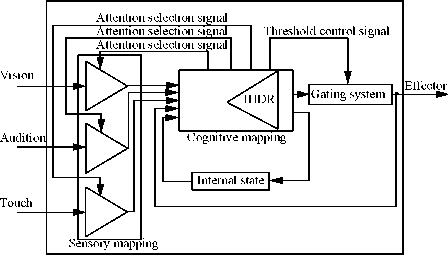

Figure 5: Partial internal architecture of a single level in

the SAIL developmental program.

gram cannot be changed once the robot is “born,”

no matter what tasks that it ends up learning. The

robot learns while performing simultaneously. The

innate reflexive behaviors enable it to explore the

environment while improving its skills. The human

trainers train the robot by interacting with it, very

much like the way human parents interact with their

infant, letting it seeing around, demonstrating how

to reaching objects, teaching commands with the re-

quired responses, delivering reward or punishment

(pressing “good” or “bad” buttons on the robot),

etc. The SAIL developmental algorithm updates the

robot memory in real-time according to what was

sensed by the sensors, what it did, and what it re-

ceived as feedback from the human trainers.
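The developmental loop described above (a program fixed at “birth,” innate reflexes as a fallback, learning while performing, and memory updates from sensing, acting, and trainer feedback) can be sketched as follows. This is a hypothetical illustration, not the SAIL algorithm: it assumes discrete states, a small action set, a tabular value per state-action pair, and “good”/“bad” button presses mapped to rewards of +1 and -1. Names such as `DevelopmentalAgent`, `trainer`, and `imposed` are invented for the example.

```python
from typing import Callable, Dict, Hashable, List, Optional, Tuple

State = Hashable
Action = str


class DevelopmentalAgent:
    """Hypothetical developmental loop: this code is fixed at "birth";
    only the learned memory (self.value) changes during the robot's life span."""

    def __init__(self, actions: List[Action], reflex: Callable[[State], Action],
                 learning_rate: float = 0.5) -> None:
        self.actions = actions
        self.reflex = reflex  # innate reflexive behavior, used until something better is learned
        self.lr = learning_rate
        self.value: Dict[Tuple[State, Action], float] = {}

    def act(self, state: State) -> Action:
        # Prefer the best learned action if it has earned positive feedback; else use the reflex.
        learned = [(self.value[(state, a)], a) for a in self.actions if (state, a) in self.value]
        best = max(learned, default=None)
        if best is not None and best[0] > 0:
            return best[1]
        return self.reflex(state)

    def update(self, state: State, action: Action, reward: float) -> None:
        # Move the stored value a fraction of the way toward the latest feedback.
        old = self.value.get((state, action), 0.0)
        self.value[(state, action)] = old + self.lr * (reward - old)

    def step(self, state: State, trainer: Callable[[State, Action], float],
             imposed: Optional[Action] = None) -> Action:
        # Learn while performing: act (or follow a demonstrated action), get feedback, update.
        action = imposed if imposed is not None else self.act(state)
        self.update(state, action, trainer(state, action))
        return action


def trainer(state: State, action: Action) -> float:
    # Assumed trainer policy: "good" (+1) for reaching a visible toy, "bad" (-1) otherwise.
    if state == "toy_in_view":
        return 1.0 if action == "reach" else -1.0
    return 0.0


agent = DevelopmentalAgent(["look", "reach"], reflex=lambda s: "look")
print(agent.step("toy_in_view", trainer))                   # look (innate reflex, "bad")
print(agent.step("toy_in_view", trainer, imposed="reach"))  # reach (demonstrated, "good")
print(agent.step("toy_in_view", trainer))                   # reach (learned)
```

The `imposed` argument stands in for a trainer demonstrating a response; without it, the reflex alone would never discover the rewarded action in this toy setting.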

4.1 Architecture

The schematic architecture of a single level of SAIL is shown in Fig. 5. Sensory inputs first enter a module called sensory mapping, whose detailed structure is discussed in Section 4.2.

Internal attention for vision, audition, and touch is a very important mechanism for the success of multimodal sensing. A major challenge of perception for high-dimensional inputs such as vision, audition, and touch is that often not all the lines in the input are related to the task at hand. Attention selection allows the signals on only a bundle of relevant lines to pass through, while the others are blocked. Attention selection is an internal effector, since it acts on the internal structure of the “brain” instead of on the external environment.
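Treating attention selection as an internal effector can be sketched by making the attention mask part of the agent's action, alongside an external motor command. This is a minimal sketch under assumed conventions: a blocked line is set to zero so that the input dimension stays fixed, and `Action`, `gate`, and the motor command name are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Action:
    """Hypothetical combined action: an external motor command plus an internal attention mask."""
    motor: str              # acts on the external environment
    attention: List[bool]   # acts on the "brain": which input lines may pass


def gate(lines: Sequence[float], mask: Sequence[bool]) -> List[float]:
    """Pass the selected lines unchanged and block (zero) all the others."""
    if len(lines) != len(mask):
        raise ValueError("mask length must match the number of input lines")
    return [x if keep else 0.0 for x, keep in zip(lines, mask)]


# Six input lines; only lines 2 and 3 (zero-based) are assumed relevant to the task.
lines = [0.3, 0.7, 0.9, 0.4, 0.8, 0.1]
action = Action(motor="turn_head_left", attention=[False, False, True, True, False, False])
print(gate(lines, action.attention))  # [0.0, 0.0, 0.9, 0.4, 0.0, 0.0]
```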

First, each sensing modality (vision, audition, and touch) needs intra-modal attention to select a subset of its internal output lines for further processing while disregarding the other, unrelated lines. Second, inter-modal attention selects one or more modalities to attend to. Attention is necessary not only because our processors have limited computational power but, more importantly, because focusing only on related inputs enables powerful generalization.
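The two attention stages described above can be composed as follows. In this hypothetical sketch, intra-modal attention is a list of line indices per modality and inter-modal attention is a set of modality names; the blocked lines are dropped rather than zeroed, so the attended vector is shorter than the raw input. The function names, the example values, and the sorted concatenation order are all assumptions.

```python
from typing import Dict, List, Sequence, Set


def intra_modal(lines: Sequence[float], selected: Sequence[int]) -> List[float]:
    """Keep only the selected lines of one modality, in the given order."""
    return [lines[i] for i in selected]


def attend(inputs: Dict[str, Sequence[float]],
           intra: Dict[str, Sequence[int]],
           inter: Set[str]) -> List[float]:
    """Intra-modal line selection, then inter-modal modality selection.
    Attended lines of the chosen modalities are concatenated in sorted modality order."""
    out: List[float] = []
    for modality in sorted(inter):
        out.extend(intra_modal(inputs[modality], intra[modality]))
    return out


inputs = {
    "vision": [0.2, 0.5, 0.9, 0.1],
    "audition": [0.7, 0.3, 0.0],
    "touch": [0.0, 1.0],
}
intra = {"vision": [2], "audition": [0, 1], "touch": [1]}
print(attend(inputs, intra, {"vision", "audition"}))  # [0.7, 0.3, 0.9]
```

Here nine raw input lines are reduced to three attended ones, which illustrates both the computational saving and the narrower input on which later processing has to generalize.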
