Provided by Cognitive Sciences ePrint Archive

AN IMPROVED 2D OPTICAL FLOW SENSOR FOR MOTION SEGMENTATION

Alan A. Stocker

Institute of Neuroinformatics

University and ETH Zürich

Winterthurerstrasse 190

8057 Zurich, Switzerland

ABSTRACT

A functional focal-plane implementation of a 2D optical

flow system is presented that detects and preserves motion

discontinuities. The system is composed of two different

network layers of analog computational units arranged in

a retinotopical order. The units in the first layer (the op-

tical flow network) estimate the local optical flow field in

two visual dimensions, where the strength of their nearest-

neighbor connections determines the amount of motion in-

tegration. Whereas in an earlier implementation [1] the con-

nection strength was set constant in the complete image

space, it is now dynamically and locally controlled by the

second network layer (the motion discontinuities network)

that is recurrently connected to the optical flow network.

The connection strengths in the optical flow network are

modulated such that visual motion integration is ideally only

facilitated within image areas that are likely to represent

common motion sources. Results of an experimental aVLSI

chip illustrate the potential of the approach and its function-

ality under real-world conditions.

1. MOTIVATION

Knowledge of visual motion is valuable for a cognitive

description of the environment, which is a prerequisite for

any intelligent behavior. Optical flow is a dense

representation of visual motion. Such a representation naturally

favors an equivalent computational architecture where an

array of identical, retinotopically arranged computational

units processes in parallel the optical flow at each image

location. Successful aVLSI implementations of such

architectures have been reported (see e.g. [2]) that demonstrated

real-time processing performance in extracting optical flow.

Although local visual motion information is sufficient for

many applications, its inherent ambiguity (which is e.g.

expressed as the aperture problem) makes the purely local

(normal) optical flow estimate of these processors unreliable

and often incorrect.

This work was supported by the Swiss National Science Foundation

and the Körber Foundation.

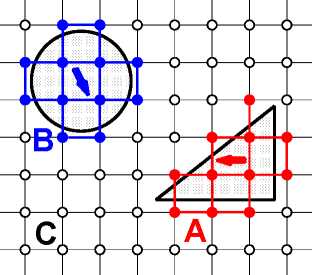

Fig. 1. Different motion sources and their appropriate

regions-of-support. Three different motion sources are in-

duced by two moving objects and the background. The col-

lective computation is ideally restricted to the isolated sets

of processing units A, B (objects) and C (background).
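
The effect of the regions-of-support in Fig. 1 can be illustrated with a small numerical sketch. It is not the circuit described in this paper: it assumes the standard brightness constancy constraint Ex*u + Ey*v + Et = 0 at every unit and replaces the resistive network by a plain least-squares pooling over a set of units. The function names, the gradient values and the three motions assigned to A, B and C are hypothetical and chosen for illustration only.

```python
# Illustrative sketch only (assumptions: brightness constancy at each unit,
# least-squares pooling instead of the chip's analog network).

def measure(gradients, motion):
    """Return (Ex, Ey, Et) per unit for a region moving with `motion`."""
    u, v = motion
    return [(ex, ey, -(ex * u + ey * v)) for ex, ey in gradients]


def normal_flow(ex, ey, et):
    """Purely local estimate: the flow component along the brightness gradient."""
    g2 = ex * ex + ey * ey
    if g2 == 0.0:
        return (0.0, 0.0)  # no gradient, no local motion information
    return (-et * ex / g2, -et * ey / g2)


def pooled_flow(units):
    """Single least-squares flow vector shared by all units in `units`."""
    sxx = sum(ex * ex for ex, ey, et in units)
    sxy = sum(ex * ey for ex, ey, et in units)
    syy = sum(ey * ey for ex, ey, et in units)
    sxt = sum(ex * et for ex, ey, et in units)
    syt = sum(ey * et for ex, ey, et in units)
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("gradients do not constrain both flow components")
    u = (-sxt * syy + syt * sxy) / det
    v = (-syt * sxx + sxt * sxy) / det
    return (u, v)


# Hypothetical scene: two objects (A, B) and a stationary background (C).
gradients = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
region_a = measure(gradients, (2.0, 0.0))
region_b = measure(gradients, (0.0, -1.0))
region_c = measure(gradients, (0.0, 0.0))

if __name__ == "__main__":
    # Local estimate of one unit in A: only the gradient-parallel component.
    print("normal flow, one unit of A:", normal_flow(*region_a[2]))
    # Integration restricted to each region-of-support.
    print("pooled over A:", pooled_flow(region_a))
    print("pooled over B:", pooled_flow(region_b))
    print("pooled over C:", pooled_flow(region_c))
    # Global integration across all three motion sources.
    print("pooled over A, B and C:", pooled_flow(region_a + region_b + region_c))
```

In this toy setting, pooling within a single region recovers that region's motion, the single-unit normal flow does not, and the estimate pooled over all three regions matches none of the three motions.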

The estimation quality can be increased significantly if

visual motion information is spatially integrated. In [3], a

motion chip is presented that integrates globally and thus performs a

collective estimation of visual motion among all the units in

the complete image space. If multiple motion

sources¹ are present, however, such a global estimate

becomes meaningless. Earlier, we presented an improved

focal-plane processor that restricts collective computation

to smooth isotropic kernels of variable size, resulting in a

smooth optical flow estimate [1]. Ideally, integration should

be limited to the extents of the individual motion sources.

Such a scheme, as illustrated in Figure 1, provides an opti-

mal optical flow estimate but requires the processing array

to be able to connect and separate groups of units dynam-

ically. Resistive network architectures applying such dy-

namical linking have been proposed before [4]. However, to

our knowledge there exists only one attempt to implement

such an approach [5]. In this one-dimensional processing

¹ E.g., a single moving object on a stationary but structured background.

0-7803-7448-7/02/$17.00 ©2002 IEEE
