composed of a multilayer network of maps in a pyramidal structure. The layers of CD build representations by detecting regularities in the preceding ones.

• Learned Representations (LR) receives inputs from CD and from itself. This sub-system has the properties of an associative memory. It is responsible for learning new skills and determines the global behaviour of the system. Each neuron of LR is connected to GE for criterion evaluation. We define representations as the set of active columns in LR at time t.

• Action Synthesis and Elementary Actions (AS and ACT) are the action sub-systems. AS produces combinations of elementary actions, and its outputs are connected to ACT. ACT is the interface between the neuron/pulse space and the environment: it is a one-layer set of maps in which each map drives one degree of freedom of the effectors in a multi-scale way.

• Global Effects (GE) is a scoring system that associates a value with each representation. We call the score obtained through a representation its effects. GE represents the criterion the system wants to maximize. When the effects of a representation are negative, the system produces actions to increase the criterion value.
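To make the LR/GE vocabulary concrete, here is a minimal Python sketch. It assumes that a representation is stored as the frozen set of active LR column indices at time t and that GE is a plain lookup table from representations to scores. The names `GlobalEffects`, `representation` and `choose_action`, as well as the `predict` model of what an action does, are hypothetical illustrations, not part of the described system. The greedy action choice is only one simple policy among many.

```python
from typing import Callable, Dict, FrozenSet, Iterable, Optional

# A representation: the set of active LR column indices at time t.
Representation = FrozenSet[int]


class GlobalEffects:
    """Hypothetical GE: a table associating a score ("effects") with each representation."""

    def __init__(self) -> None:
        self.scores: Dict[Representation, float] = {}

    def effects(self, rep: Representation) -> float:
        # Assumption: representations that were never scored are neutral (0.0).
        return self.scores.get(rep, 0.0)


def representation(column_activity: Dict[int, bool]) -> Representation:
    """Collect the indices of the LR columns that are active at time t."""
    return frozenset(col for col, active in column_activity.items() if active)


def choose_action(
    rep: Representation,
    ge: GlobalEffects,
    actions: Iterable[str],
    predict: Callable[[Representation, str], Representation],
) -> Optional[str]:
    """If the current effects are negative, greedily pick the action whose
    predicted next representation scores highest; otherwise do nothing."""
    if ge.effects(rep) >= 0:
        return None  # criterion not violated: no corrective action
    return max(actions, key=lambda a: ge.effects(predict(rep, a)))
```

For example, with `ge.scores = {frozenset({1, 2}): -1.0, frozenset({3}): 2.0}`, the activity `{1: True, 2: True, 3: False}` yields the representation `frozenset({1, 2})`. Its effects are negative, so `choose_action` returns `"turn"` when `predict` maps `"turn"` to `frozenset({3})` and leaves the representation unchanged for `"stay"`.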

3. Developmental properties

The system is able to detect spatial and temporal invariants and to produce new actions. Here we give an example of spatial invariant detection. Patterns are extracted from images and grouped into classes according to an invariance criterion, which will be related to shapes in the environment. SEN neuron potentials are composed of images of projections of these shapes. CD extracts what is common to, or different among, the features that compose them.
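As a toy illustration of grouping patterns by an invariance criterion, the sketch below treats binary patterns as sets of "on" pixel coordinates and uses translation invariance as the criterion. The text does not specify its criterion beyond relating it to shapes, so this choice is an assumption, and the helpers `canonical`, `group_by_invariant` and `common_and_different` are hypothetical. Set intersection and symmetric difference stand in for "what is common or different". CD itself works with competitive filters on neuron potentials, not with set operations.

```python
from collections import defaultdict
from typing import Dict, FrozenSet, List, Tuple

Pixel = Tuple[int, int]          # (row, column)
Pattern = FrozenSet[Pixel]       # non-empty set of "on" pixels


def canonical(p: Pattern) -> Pattern:
    """Translation-invariant form: shift the pattern so its top-left corner is (0, 0)."""
    r0 = min(r for r, _ in p)
    c0 = min(c for _, c in p)
    return frozenset((r - r0, c - c0) for r, c in p)


def group_by_invariant(patterns: List[Pattern]) -> Dict[Pattern, List[Pattern]]:
    """Group patterns into classes: translated copies of a shape share one class."""
    classes: Dict[Pattern, List[Pattern]] = defaultdict(list)
    for p in patterns:
        classes[canonical(p)].append(p)
    return dict(classes)


def common_and_different(a: Pattern, b: Pattern) -> Tuple[Pattern, Pattern]:
    """Pixels two shapes share, and pixels where they differ, after alignment."""
    ca, cb = canonical(a), canonical(b)
    return ca & cb, ca ^ cb
```

With a horizontal bar `{(0, 0), (0, 1), (0, 2)}`, the same bar shifted to `{(5, 3), (5, 4), (5, 5)}`, and a corner `{(0, 0), (1, 0), (0, 1)}`, the two bars fall into one class and the corner into another. Comparing the bar with the corner gives `{(0, 0), (0, 1)}` as common and `{(0, 2), (1, 0)}` as different.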

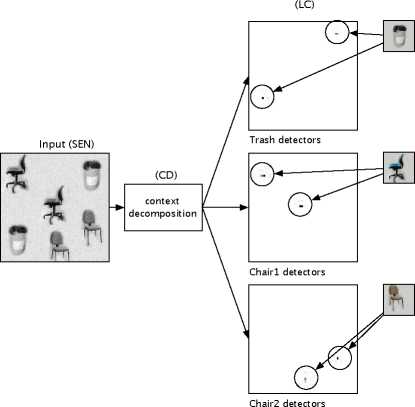

Figure 2 shows a simple result in which three distinct objects are presented to the system. After the image is decomposed into elementary features, three LR maps are learned, one per object, each detecting and localizing its object. This example uses only the spatial competition property. The images of potentials are decomposed by applying groups of competitive filters systematically and simultaneously to every part of the images. The layered architecture of CD allows this process to be repeated across the structure, so that the neurons at the top of CD have a receptive field as large as the whole image. At this level of representation, input images have been diffracted through the sub-system, decomposed into spatial frequencies and pattern contents, and recomposed into more complex structures. This "decomposition and recomposition" evolves over time and converges toward a stable state. All the learned weight kernels are based on the image frequencies and feature contents. The activity of the top-level neurons of CD provides a distributed representation of the environment.
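The decomposition step above can be approximated, very loosely, by a rate-based sketch. At each layer, a bank of 2×2 filters is applied to every non-overlapping patch, and the maps then compete so that only the strongest response survives at each location. This is one possible reading of "spatial competition among neurons of different maps of the same layer". The sketch does not model the pulse neurons, learned kernels or actual filter sizes of CD. The random kernels, the stride-2 layout and the names `competitive_layer` and `pyramid` are assumptions. Because each layer halves the resolution, a neuron in layer l sees a 2^l × 2^l patch, so a 128×128 input needs seven layers before a top neuron covers the whole image.

```python
from typing import List

import numpy as np


def competitive_layer(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """One pyramid layer: 2x2 filters on every non-overlapping patch, then
    winner-take-all competition among the K maps at each location.

    x:       (C, H, W) input maps, H and W even
    kernels: (K, C, 2, 2) filter weights (random here, not learned)
    returns: (K, H // 2, W // 2); only the winning map is non-zero per location
    """
    C, H, W = x.shape
    # patches[c, i, a, j, b] == x[c, 2*i + a, 2*j + b]
    patches = x.reshape(C, H // 2, 2, W // 2, 2)
    responses = np.einsum("kcab,ciajb->kij", kernels, patches)
    winners = responses.argmax(axis=0)  # index of the winning map per location
    mask = np.arange(kernels.shape[0])[:, None, None] == winners[None, :, :]
    return np.where(mask, responses, 0.0)


def pyramid(image: np.ndarray, n_maps: int = 4, seed: int = 0) -> List[np.ndarray]:
    """Stack competitive layers until one location covers the whole image.
    Assumes a square image whose side is a power of two."""
    rng = np.random.default_rng(seed)
    x = image[None, :, :]  # a single input map: (1, H, W)
    layers = []
    while x.shape[1] > 1:
        kernels = rng.standard_normal((n_maps, x.shape[0], 2, 2))
        x = competitive_layer(x, kernels)
        layers.append(x)
    return layers
```

On a random 128×128 image, `pyramid` returns seven layers, and the last one has shape `(4, 1, 1)`. Each of its neurons therefore integrates the entire input. Stride-2 patches were chosen only to keep the receptive-field arithmetic simple.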

Figure 2: Simple example of spatial competition among neurons of different maps of the same layer. In the LR layer, burst states are represented by grey levels; white means no discharge. The system is able to discriminate and localize the two kinds of chairs and the trash bin in a 128×128 input image. In this example, the system includes 20 480 neurons and 13 922 304 synapses. Feature extraction reaches a stable state after 500 time steps (less than a minute on a Sun Blade 100 workstation), while recognition is much faster (about 12 Hz under the same conditions).
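A few back-of-the-envelope figures follow directly from the numbers in the caption. The mean fan-in divides the synapse count by the neuron count and says nothing about how connections are actually distributed across layers. The per-step bound assumes that the 500 extraction steps take at most one minute.

```latex
\bar{s} = \frac{13\,922\,304\ \text{synapses}}{20\,480\ \text{neurons}} = 679.8\ \text{synapses per neuron}

\Delta t_{\text{step}} < \frac{60\ \text{s}}{500} = 120\ \text{ms},
\qquad
T_{\text{recognition}} \approx \frac{1}{12\ \text{Hz}} \approx 83\ \text{ms}
```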

4. Conclusion

Implementation of this architecture is in progress, and first experiments are underway with a Nomadics XR 4000 and a six-legged robot to elaborate new behaviors.

