model, an agent can learn how to use its innate motor skills to achieve a particular demonstrated task. Thus, the ability to recognise and reproduce actions can develop from innate skills through social situatedness. We will demonstrate the implementation of this system on two sets of experiments: a simulated humanoid robot learning to pick up a glass, and a physical robot learning from a human to follow walls. We have also implemented the latter in simulation (Marom et al., 2002) but do not include it in this paper due to space considerations.

4. Object-Interactions Experiment

The experiment presented in this section involves two simulated humanoid robots (waist upwards), a demonstrator and an imitator, each with eleven degrees of freedom and each interacting with an object. Each robot has three degrees of freedom at the neck, three at each shoulder, and one at each elbow. The robots are allowed to interact with one object each. The objects are identical and have six degrees of freedom, i.e. they can take any position and orientation in 3D space. The dynamics of each robot are simulated in DynaMechs (McMillan et al., 1995), a collection of C++ libraries that simulate the physics involved with objects and joint control. The torque for the control of each joint, i.e. the input to DynaMechs, is calculated with the aid of a Proportional-Integral-Derivative (PID) controller, which converts postural targets (i.e. via points for each joint) into such torque values.
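As a rough illustration of how a postural target can be turned into a joint torque, the following is a minimal textbook PID sketch. It is not the controller used in the experiments: the gains, the time step, the inertia value, and the names `JointPID` and `simulate_joint` are all assumptions made for this example, and the single frictionless joint merely stands in for the dynamics engine.

```python
from dataclasses import dataclass


@dataclass
class JointPID:
    """Textbook PID controller for one joint (gains are illustrative assumptions)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def torque(self, target: float, angle: float, dt: float) -> float:
        """Return a torque that drives `angle` towards the postural `target`."""
        error = target - angle
        self.integral += error * dt                  # accumulated error (I term)
        derivative = (error - self.prev_error) / dt  # rate of change of error (D term)
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_joint(target: float, steps: int = 4000, dt: float = 0.001,
                   inertia: float = 0.05) -> float:
    """Hypothetical stand-in for the dynamics engine: one frictionless rotary joint."""
    pid = JointPID(kp=20.0, ki=1.0, kd=2.0)
    angle, velocity = 0.0, 0.0
    for _ in range(steps):
        tau = pid.torque(target, angle, dt)
        velocity += (tau / inertia) * dt  # semi-implicit Euler integration
        angle += velocity * dt
    return angle
```

In a full simulation one such controller would run per joint (eleven per robot here), each receiving its own via point and its own proprioceptive joint angle.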

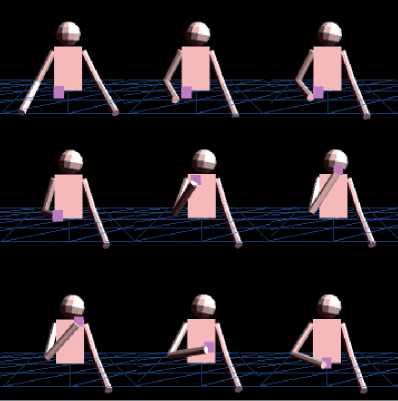

The demonstrator is controlled by a sequence of such postural targets to interact with its object, which is lying on a surface at waist level. The postural targets control the demonstrator to ‘grasp’ the object, pick it up, ‘drink’ its hypothetical contents, and then put it back on the surface (see Figure 2). The absence of fingers, however, as well as software limitations, leads to a rather crude robot-object interaction: the object is merely attached to (or detached from) the wrist, as long as this is desired and the wrist is close enough, i.e. the object is weightless.

The system described in the previous section is used to model and control the perceptual-motor skill of the imitator; the skills of the demonstrator are hand-crafted and do not change. This is the case for all the experiments reported in this paper. In this experiment, the input to the system comes from a crude approximation to visual perception, which consists of the joint angles of the observed demonstrator (11 degrees of freedom), plus their corresponding joint velocities (another 11), plus the position and orientation of the observed object (6 degrees of freedom), plus their corresponding velocities (another 6): 34 dimensions in total, with noise added to each. Similarly, the proprioception of the imitator is approximated as explicit access to a noisy version of its own joint angles and joint velocities.

Figure 2: A sequence of snapshots of the demonstrated behaviour; left to right, top to bottom.

Notice that the imitator perceives the demonstrator in the same way it perceives itself, and thus the imitator can directly represent what it is trying to imitate, as described in Section 2. In other words, the perceived input can be directly stored as targets to be achieved by the motor system (via the inverse model).

Here the inverse model consists of two parts: (1) the PID controller, used for posture control: targets are passed into the PID controller, which together with proprioceptive feedback calculates the required torque (or motor commands) of each limb; and (2) a set of boundary conditions for object-interactions, which specify when the wrist is close enough to pick up the glass, when the wrist/glass is close enough to the mouth to ‘drink’, and when the wrist/glass is close enough to the table to put down the glass (these boundary conditions are set to a radius of approximately 4 cm from the centre of the glass, mouth, and table).
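The boundary conditions in part (2) amount to simple distance tests. The sketch below shows one possible way to express them, assuming 3D points in metres; the function names, the event labels, the `want_hold` flag, and the ordering of the checks are hypothetical choices for this example, and only the 4 cm radius comes from the text.

```python
import math

INTERACTION_RADIUS_M = 0.04  # approximately 4 cm, as stated in the text


def within(a, b, radius=INTERACTION_RADIUS_M):
    """True if the 3D points a and b are no further apart than radius."""
    return math.dist(a, b) <= radius


def update_interaction(holding, wrist, glass, mouth, table, want_hold):
    """Apply the three boundary conditions and return (holding, event).

    holding   -- whether the glass is currently attached to the wrist
    want_hold -- whether attachment is currently desired (hypothetical flag)
    event     -- "pick_up", "drink", "put_down", or None
    """
    if not holding:
        # Attach the glass only if this is desired and the wrist is close enough.
        if want_hold and within(wrist, glass):
            return True, "pick_up"
        return False, None
    # While held, the glass moves with the wrist, so the wrist position is tested.
    if within(wrist, mouth):
        return True, "drink"
    if not want_hold and within(wrist, table):
        return False, "put_down"
    return True, None
```

For instance, with the glass at `(0.30, 0.0, 0.0)`, a wrist at `(0.31, 0.0, 0.0)` is 1 cm away and therefore triggers a pick-up when `want_hold` is true, whereas a wrist at `(0.40, 0.0, 0.0)` does not.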

To summarise, the stimulus in this experiment is a 34-dimensional vector that represents the imitator’s perception of the demonstrator and the object, and the motor commands calculated by the inverse model are used to control each of the imitator’s limbs, and the object-interaction mechanism.
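The layout of the 34-dimensional stimulus summarised above can be sketched as follows. The ordering of the components, the function name `perceive`, and the use of zero-mean Gaussian noise with standard deviation `noise_std` are assumptions made for illustration; the text states only that noise is added to each dimension.

```python
import random

N_JOINTS = 11  # 3 at the neck + 3 at each shoulder + 1 at each elbow
N_OBJECT = 6   # object position (3) + orientation (3)


def perceive(joint_angles, joint_vels, obj_pose, obj_vel,
             noise_std=0.01, rng=random):
    """Build a noisy 34-dimensional stimulus from the observed state.

    The noise model (independent Gaussian per dimension) is an assumption.
    """
    if len(joint_angles) != N_JOINTS or len(joint_vels) != N_JOINTS:
        raise ValueError("expected 11 joint angles and 11 joint velocities")
    if len(obj_pose) != N_OBJECT or len(obj_vel) != N_OBJECT:
        raise ValueError("expected 6 object pose values and 6 object velocities")
    clean = [*joint_angles, *joint_vels, *obj_pose, *obj_vel]  # 11 + 11 + 6 + 6 = 34
    return [x + rng.gauss(0.0, noise_std) for x in clean]
```

The imitator's proprioception could be built the same way from its own joint angles and joint velocities alone, giving 22 noisy values.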

4.1 Learning & Recall

In this experiment, during the learning phase the imitator merely observes the demonstrator, analysing the visual perception of what the demonstrator is doing by training its SOFM; it does not try to replicate
