W. K. Hardle, R. A. Moro, and D. Schafer

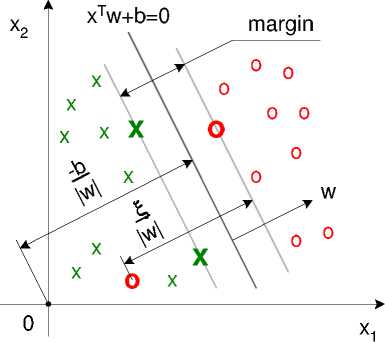

Fig. 4. The separating hyperplane $x^\top w + b = 0$ and the margin in a non-separable case. The observations marked with bold crosses and zeros are support vectors. The hyperplanes bounding the margin zone, equidistant from the separating hyperplane, are represented as $x^\top w + b = 1$ and $x^\top w + b = -1$.
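The geometry in Fig. 4 can be illustrated with a minimal sketch. The weight vector `w`, the offset `b`, and the sample points below are hypothetical values chosen for illustration, not taken from the paper; the function only reports where a point lies relative to the three hyperplanes and does not fit an SVM.

```python
import math

def decision_value(x, w, b):
    """Return x'w + b, the signed score of point x."""
    return sum(xi * wi for xi, wi in zip(x, w)) + b

def margin_zone(x, w, b):
    """Locate x relative to the hyperplanes x'w + b = -1, 0, 1."""
    f = decision_value(x, w, b)
    if f >= 1:
        return "outside margin, positive side"
    if f <= -1:
        return "outside margin, negative side"
    return "inside margin zone"

def margin_half_width(w):
    """Distance from the separating hyperplane to either bounding hyperplane."""
    return 1.0 / math.sqrt(sum(wi * wi for wi in w))

# Hypothetical hyperplane parameters (assumed for illustration only).
w = (3.0, 4.0)
b = -5.0

print(margin_zone((3.0, 4.0), w, b))
print(margin_zone((1.0, 0.6), w, b))
print(margin_zone((0.0, 0.0), w, b))
print(margin_half_width(w))
```

In the non-separable case, observations that fall inside the margin zone or on the wrong side of the separating hyperplane are among the support vectors, which is why a check of this kind is useful when inspecting a fitted model.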

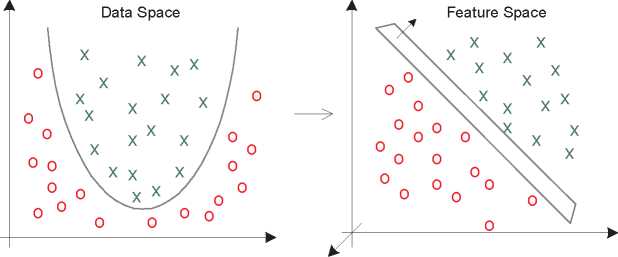

Fig. 5. Mapping from a two-dimensional data space into a three-dimensional space of features, $\mathbb{R}^2 \to \mathbb{R}^3$, using a quadratic kernel function $K(x_i, x_j) = (x_i^\top x_j)^2$. The three features correspond to the three components of a quadratic form: $\tilde{x}_1 = x_1^2$, $\tilde{x}_2 = \sqrt{2}\,x_1 x_2$ and $\tilde{x}_3 = x_2^2$; thus, the transformation is $\Psi(x_1, x_2) = (x_1^2, \sqrt{2}\,x_1 x_2, x_2^2)^\top$. The data separable in the data space with a quadratic function will be separable in the feature space with a linear function. A non-linear SVM in the data space is equivalent to a linear SVM in the feature space. The number of features will grow fast with the data dimension $d$ and the degree of the polynomial kernel $p$, which equals 2 in our example, making a closed-form representation of $\Psi$ such as the one given here practically impossible.
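The equivalence stated in the caption of Fig. 5 can be checked numerically. The sketch below is an illustration under stated assumptions, not code from the paper: the function names and sample points are hypothetical, and the feature count uses the standard number of degree-p monomials in d variables, which applies to the homogeneous polynomial kernel of the kind used in this example.

```python
import math

def quadratic_kernel(x, y):
    """Quadratic kernel from Fig. 5: the squared inner product of x and y."""
    return sum(a * b for a, b in zip(x, y)) ** 2

def feature_map(x):
    """Explicit map from R^2 to R^3: (x1^2, sqrt(2)*x1*x2, x2^2)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)

def dot(u, v):
    """Ordinary inner product in the feature space."""
    return sum(a * b for a, b in zip(u, v))

def n_features(d, p):
    """Number of distinct monomials of degree exactly p in d variables."""
    return math.comb(d + p - 1, p)

# Hypothetical sample points (assumed for illustration only).
x = (1.0, 2.0)
y = (3.0, -1.0)

# The kernel in the data space matches the inner product in the feature space.
print(quadratic_kernel(x, y), dot(feature_map(x), feature_map(y)))

# Feature-space dimension for a few (d, p) pairs; d = 2, p = 2 gives 3.
for d, p in [(2, 2), (10, 2), (10, 3), (50, 4)]:
    print(d, p, n_features(d, p))
```

Evaluating the kernel directly costs one inner product in the data space, whereas the explicit map requires building every monomial, which is the practical reason the kernel form is preferred as d and p grow.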
