Learning and Nonlinear Models - Revista da Sociedade Brasileira de Redes Neurais, Vol. 1, No. 2, pp. 122-130, 2003

© Sociedade Brasileira de Redes Neurais

where each output $y_j$ is connected to the input $x_i$ with a weight $w_{ji}$. Comparing this expression with equation (8) allows one to rewrite the backprojection in terms of synaptic weights, where each pixel in the final image is fully connected by weights $w_{ji}$ to all pixels of $q(s,\theta)$. Index $j$ enumerates all $M$ pixels in the final image $f(r,\phi)$, while index $i$ enumerates all $N$ pixels in $q(s,\theta)$:

$$
f(r_j,\phi_j) = y_j = \frac{1}{K}\sum_{i=1}^{N} w_{ji}\,x_i = \frac{1}{K}\sum_{i=1}^{N} w_{ji}\,q(s_i,\theta_i) \tag{10}
$$

Note that some weights are unity and the others are zero. To reduce memory usage and improve speed, the null weights can be purged, yielding a partially connected neural network.
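The nearest-pixel, partially connected network described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the $n\times n$ image grid with unit spacing centred on the origin, the $K$ parallel-beam angles $\theta_k = k\pi/K$, the $n_s$ detector bins, and the helper names `build_sparse_weights` and `backproject` are all hypothetical. Only the unity weights are stored, as a list of input indices per output pixel, so the sum in equation (10) runs over the surviving connections only.

```python
import math

def build_sparse_weights(n, K, n_s):
    """Nearest-pixel parallel-beam weights, keeping only the unity links.

    Hypothetical layout: n x n image pixels with unit spacing centred on the
    origin; sinogram with K angles theta_k = k*pi/K and n_s unit-spaced,
    centred detector bins, flattened as i = k*n_s + bin.
    Returns, for each output pixel j, the list of inputs i with w_ji = 1.
    """
    c_img = (n - 1) / 2.0
    c_det = (n_s - 1) / 2.0
    links = []
    for row in range(n):
        for col in range(n):
            x, y = col - c_img, c_img - row
            r, phi = math.hypot(x, y), math.atan2(y, x)
            inputs = []
            for k in range(K):
                theta = k * math.pi / K
                s = r * math.cos(theta - phi)      # sinusoid s(theta) for this pixel
                b = int(round(s + c_det))          # nearest detector bin
                if 0 <= b < n_s:                   # null weights are never stored
                    inputs.append(k * n_s + b)
            links.append(inputs)
    return links

def backproject(links, q_flat, K):
    """y_j = (1/K) * sum_i w_ji * x_i over the retained unity weights only."""
    return [sum(q_flat[i] for i in inputs) / K for inputs in links]
```

For a $3\times 3$ image with $K = 4$ and $n_s = 5$, each output pixel keeps 4 of the 20 possible connections.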

Equation (8) can be slightly modified to take into account the fan-beam geometry (4) [8]:

$$
f(r,\phi) = \frac{1}{K}\sum_{i=1}^{K} \frac{1}{U^{2}}\, q\!\left(\frac{r\cos(\beta_i-\phi)}{U},\, \beta_i\right) \tag{11}
$$

The neural network can easily be modified to incorporate this new scenario. The only change needed is in the weight values: instead of unity, they are given by

$$
w_{ji} = U^{-2} \tag{12}
$$
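The fan-beam weights of equation (12) depend on $U$, which this excerpt does not define. The sketch below assumes one common convention for equally spaced collinear detectors, $U = (D + r\sin(\beta_i-\phi))/D$, with $D$ the source-to-origin distance; both $D$ and the function name `fan_beam_link` are hypothetical. For a single pixel and source angle it returns the detector coordinate used in equation (11) and the weight $w_{ji}$.

```python
import math

def fan_beam_link(r, phi, beta, D):
    """Return (s_prime, weight) for pixel (r, phi) and source angle beta.

    Assumption: equally spaced collinear detectors with
    U = (D + r*sin(beta - phi)) / D, D = source-to-origin distance.
    """
    U = (D + r * math.sin(beta - phi)) / D
    s_prime = r * math.cos(beta - phi) / U   # detector coordinate in eq. (11)
    weight = U ** -2                         # eq. (12): w_ji = U^-2
    return s_prime, weight
```

As $D$ grows, $U \to 1$, so the weight tends to unity and the coordinate tends to $r\cos(\beta_i-\phi)$, recovering the parallel-beam case.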

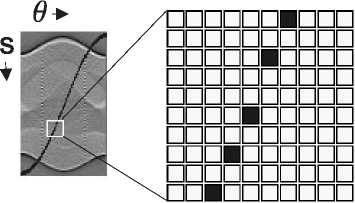

Figure 6: Zoom of part of the sinusoid, showing the points calculated according to equation (4).

Another refinement to be incorporated in the reconstruction network is interpolation. When the calculated value of $s$, which is a function of the angle $\theta$ as expressed in (4), is discretized, the nearest pixel is selected. As a result, only a few relevant pixels in $q(s,\theta)$ contribute to a given pixel in the final image. Figure 6 shows a zoomed view of the sinusoid relation in $q(s,\theta)$, where a significant space between adjacent pixels can be seen. To improve image quality, we use interpolation [11,12] to make good use of neighboring pixels. A linear interpolation is obtained by computing a weighted combination of the two adjacent pixels according to their proximity to the sinusoid. Figure 7 illustrates the adjacent pixels incorporated. Backprojection is then given by:

$$
f(r,\phi) = \frac{1}{K}\sum_{k=1}^{K}\left[u_k\, q(\lfloor s\rfloor, \theta_k) + v_k\, q(\lceil s\rceil, \theta_k)\right] \tag{13}
$$

where the calculated value of $s$ is either truncated downward to the closest value on the discretized $s$ axis, $\lfloor s\rfloor$ (floor of $s$), or truncated upward, $\lceil s\rceil$ (ceiling of $s$).

The weights $u_k$ and $v_k$ are calculated by

$$
u_k = \lceil s\rceil - s = \lceil s\rceil - r\cos(\theta_k-\phi) \tag{14}
$$

and

$$
v_k = s - \lfloor s\rfloor = r\cos(\theta_k-\phi) - \lfloor s\rfloor \tag{15}
$$
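The linear interpolation of equations (13) to (15) can be sketched for one output pixel as below. The detector layout (unit bin spacing, bin position $s + c$ for a hypothetical centre offset `c_det`) and the function name `interp_backproject_pixel` are assumptions. One deliberate deviation: if $\lceil s\rceil$ is taken literally, an $s$ that falls exactly on a bin gives $u_k = v_k = 0$, so the sketch uses $\lfloor s\rfloor + 1$ as the upper bin, which equals $\lceil s\rceil$ whenever $s$ is not an integer and keeps $u_k + v_k = 1$.

```python
import math

def interp_backproject_pixel(q, r, phi, thetas, c_det):
    """Linearly interpolated parallel-beam backprojection for pixel (r, phi).

    q[k][b] is the filtered projection at angle thetas[k] and detector bin b.
    Hypothetical layout: unit bin spacing, bin position = s + c_det.
    """
    K = len(thetas)
    n_s = len(q[0])
    total = 0.0
    for k, theta in enumerate(thetas):
        s = r * math.cos(theta - phi) + c_det   # position on the bin axis
        lo = math.floor(s)                      # floor of s
        hi = lo + 1                             # upper neighbour (ceil for non-integer s)
        u = hi - s                              # weight of lower bin, eq. (14)
        v = s - lo                              # weight of upper bin, eq. (15)
        # Bins outside the detector contribute zero.
        if 0 <= lo < n_s:
            total += u * q[k][lo]
        if 0 <= hi < n_s:
            total += v * q[k][hi]
    return total / K
```

With a projection that grows linearly across the bins, the interpolated value at each angle equals the exact bin position of $s$, which is a quick sanity check for the weights.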
